Introducing RealityCheck: Zero Trust Video-Conferencing Solution to Combat Deepfakes and Generative AI

As AI technology rapidly advances, AI deception has become a significant threat. Several recent incidents underscore the gravity of the issue:

- The fraudulent transfer of $25 million during a video call where a finance worker was duped by deepfakes impersonating multiple executives.

- The U.S. Healthcare and Public Health sectors have experienced breaches where IT help desks received deepfake audio calls, leading to unauthorized access to systems.

- Ferrari’s CEO was impersonated in an attempt to scam the company.

- Even cybersecurity strongholds like KnowBe4 have been duped by deepfakes during the onboarding process, allowing a North Korean attacker to load malware onto a company-issued device.

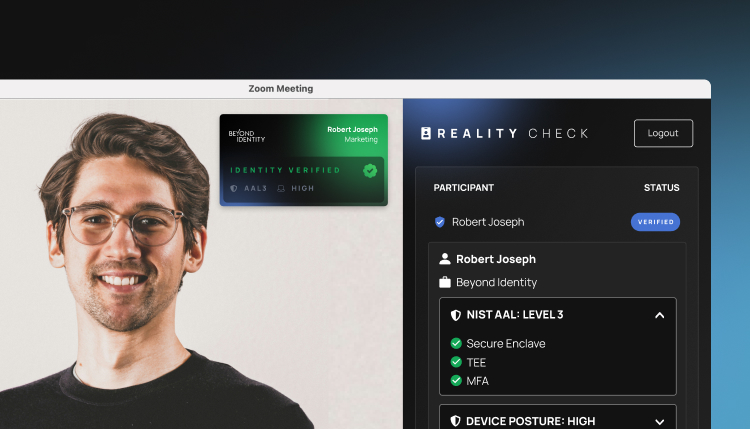

Released today, RealityCheck is Beyond Identity’s newest product. It uses deterministic signals to prevent deepfake and AI impersonation attacks by certifying the authenticity of call participants and removing unauthorized users from high-risk conference calls.

Learn more about the product below and see it in action today.

Existing Solutions Fall Short

Current strategies for combating AI deception, including deepfake detection tools and end-user training, are largely probabilistic and lack definitive guarantees.

Deepfake detection technology and deepfakes are locked in a perpetual arms race, with the advantage seesawing between them. Because the same models used to detect deepfakes can also be used to create them, bad actors can keep pace with, or even outpace, detection efforts.

Additionally, end-user training is often ineffective, as it struggles to keep pace with the rapidly changing threat landscape and places the burden of ensuring authenticity on the end user.

Trust users on high-risk video conferencing calls

Rather than depending on probabilistic signals or the judgment of end users to address AI deception in digital communication, RealityCheck adopts a zero-trust approach that leverages deterministic signals to establish trust.

Instead of asking, “How can a user tell whether this is a deepfake?”, a viable solution must consider these questions:

- Can you trust the user behind the device?

- What claims can the device itself make about its provenance and risk posture?

- How do we establish and verify this trust?

These questions are crucial for developing a reliable framework to ensure the authenticity and security of digital interactions.
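To make the contrast with probabilistic detection concrete, here is a minimal Python sketch of one kind of deterministic signal: a challenge-response proof that a participant's device holds a key enrolled in advance. This is not RealityCheck's implementation; the registry, the function names, and the enrollment flow are hypothetical. The sketch assumes a per-device shared secret and uses HMAC-SHA256 only so that it runs with the standard library; a production design would more likely keep an asymmetric private key in device hardware and store only the public key on the verifier side.

```python
import hashlib
import hmac
import secrets

# Hypothetical registry of enrolled devices: device_id -> secret provisioned at enrollment.
# Assumption: a real system would keep a hardware-bound private key on the device and
# store only a public key here; HMAC is used so this sketch needs only the stdlib.
ENROLLED_DEVICES: dict[str, bytes] = {}


def enroll_device(device_id: str) -> bytes:
    """Provision a random 32-byte secret for a device and record it in the registry."""
    key = secrets.token_bytes(32)
    ENROLLED_DEVICES[device_id] = key
    return key


def issue_challenge() -> bytes:
    """Generate a fresh, unpredictable nonce for each check."""
    return secrets.token_bytes(16)


def device_respond(key: bytes, challenge: bytes) -> bytes:
    """Runs on the participant's device: prove possession of the enrolled key."""
    return hmac.new(key, challenge, hashlib.sha256).digest()


def verify_participant(device_id: str, challenge: bytes, response: bytes) -> bool:
    """Deterministic yes/no answer: the response either matches or it does not."""
    key = ENROLLED_DEVICES.get(device_id)
    if key is None:
        return False  # unknown devices are never trusted
    expected = hmac.new(key, challenge, hashlib.sha256).digest()
    return hmac.compare_digest(expected, response)  # constant-time comparison


if __name__ == "__main__":
    key = enroll_device("laptop-42")
    challenge = issue_challenge()
    print(verify_participant("laptop-42", challenge, device_respond(key, challenge)))  # True
    impostor_key = secrets.token_bytes(32)  # attacker without the enrolled key
    print(verify_participant("laptop-42", challenge, device_respond(impostor_key, challenge)))  # False
```

The check either passes or fails; there is no confidence score for an attacker to push over a threshold. Because each check uses a freshly issued nonce, a response captured during an earlier check does not match a new challenge.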

RealityCheck overlays a badge of authenticity on Zoom camera streams and displays a side panel with additional data about device and user risk. Continuous rechecking keeps meeting participants informed about who is on the call and the security posture of each device, fostering trust among participants.
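Continuous rechecking can be pictured as re-evaluating a fixed policy over periodic snapshots of identity and device claims. The sketch below is illustrative only: the `PostureSnapshot` fields, the `REQUIRED_CHECKS` policy, and the badge strings are hypothetical assumptions, not RealityCheck's actual signals or UI states. A failed identity check ends the evaluation and flags the participant for removal, mirroring the removal of unauthorized users from high-risk calls.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PostureSnapshot:
    """Hypothetical identity and device claims collected at one recheck interval."""
    identity_verified: bool  # e.g. outcome of a cryptographic key-possession check
    disk_encrypted: bool
    firewall_enabled: bool
    os_patched: bool


# Hypothetical policy: device checks that must all pass for a "verified" badge.
REQUIRED_CHECKS = ("disk_encrypted", "firewall_enabled", "os_patched")


def badge_for(snapshot: PostureSnapshot) -> str:
    """Apply a fixed rule, so the same claims always produce the same badge."""
    if not snapshot.identity_verified:
        return "unverified"
    failing = [name for name in REQUIRED_CHECKS if not getattr(snapshot, name)]
    if failing:
        return "at-risk: " + ", ".join(failing)
    return "verified"


def recheck(snapshots: list[PostureSnapshot]) -> tuple[list[str], bool]:
    """Re-evaluate each snapshot in order; stop and flag removal on a failed identity check."""
    badges: list[str] = []
    for snapshot in snapshots:
        badge = badge_for(snapshot)
        badges.append(badge)
        if badge == "unverified":
            return badges, True  # participant should be removed from the call
    return badges, False


if __name__ == "__main__":
    timeline = [
        PostureSnapshot(True, True, True, True),
        PostureSnapshot(True, True, False, True),  # firewall turned off mid-call
        PostureSnapshot(False, True, True, True),  # identity check fails
    ]
    print(recheck(timeline))
    # (['verified', 'at-risk: firewall_enabled', 'unverified'], True)
```

Keeping the policy as a pure function of the snapshot makes each badge reproducible and auditable: given the same claims, every participant sees the same result.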

Get started with RealityCheck today

RealityCheck is currently a free add-on with any of Beyond Identity’s paid plans. For more information and to see an interactive demo, please visit the RealityCheck product page.

In the future, we aim to provide authentication assurance across collaboration platforms, including Zoom, Google Meet, and Microsoft Teams, as well as other communication channels such as email. If there is a specific feature a Generative AI Prevention solution could help your team with, share your feedback here.
